This is the first entry in the Japan JavaFX Advent Calendar 2012.

I gave a presentation about multi-touch support in JavaFX at the 8th Japan JavaFX Workshop. In this entry, I add some information that supplements the presentation.

The presentation slides are below.

Developing multi-touch application in JavaFX from Takashi Aoe

The source code of the sample application is here:

https://gist.github.com/4143183

Additional information on the multi-touch API in JavaFX

JavaFX 2.2 introduces a multi-touch API. I was very surprised when the Developer Preview was released, because the API documentation had quietly appeared in the JavaDoc without any mention in the release notes.

The entry "What's new in JavaFX 2.2" on The JavaFX Blog describes multi-touch support as follows:

Multi-touch support for touch-enabled devices. As of today this is mostly relevant for desktop-class touch screen displays and touch pads, this will enable the adoption of sophisticated UIs on embedded devices running Java SE Embedded on ARM-based chipsets, such as kiosks, telemetry systems, healthcare devices, multi-function printers, monitoring systems, etc. This is a segment of the Java application market that is usually overseen by most application developers, but that is thriving.

I think the main target of multi-touch support is embedded devices. In my opinion, Oracle probably wants to take the initiative in user interfaces for embedded devices before Android moves into the same domain.

Of course, I think it is also intended for developing iOS, Android, Windows Phone and Windows RT applications.

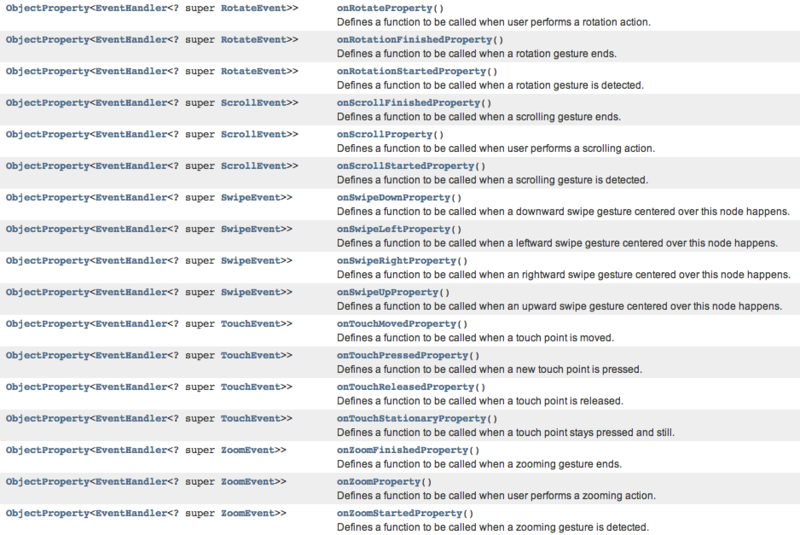

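As I explained at the workshop, two kinds of events were added to the javafx.scene.input package: TouchEvent, which handles basic touch operations, and GestureEvent, which handles more abstract gestures through its subclasses RotateEvent, ScrollEvent, SwipeEvent and ZoomEvent.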

Scene and Node also gained properties for registering handlers for these events, such as onTouchPressed, onScroll, onRotate and onZoom.

The following are points I could not cover at the workshop because of the time limit.

- About TouchEvent

  - When multiple points are touched, a separate TouchEvent is delivered for each touch point, and all events caused by the same change share one eventSetId.

  - Whether the Ctrl, Alt, Meta and Shift keys are held down can be detected.

  - It implements the copyFor() method, which copies the event for delivery to another node.

  - To get the number of touch points, use the getTouchCount() method.

  - The TouchPoint class stores information about a touch point. It provides the following methods:

    - getX()/getY() return the position of the touch point relative to the origin of the event source.

    - getSceneX()/getSceneY() return the position of the touch point relative to the origin of the Scene.

    - getScreenX()/getScreenY() return the absolute position of the touch point on the screen.

  - There is also a grabbing API that changes the event target, and you can switch targets with TouchPoint's grab() method ... I guess. (Sorry, I have not investigated it enough.)

- About RotateEvent

  - If the trackpad supports multi-touch, this event can also be fired from the trackpad. When the event comes from the trackpad, the mouse cursor location is used as the gesture coordinates.

- About ScrollEvent

  - This event is also fired by the mouse wheel, but in that case only the SCROLL event is delivered, without SCROLL_STARTED and SCROLL_FINISHED.

  - Normally you read the scroll amount from the deltaX/deltaY properties, but for text-based components you should use the textDeltaX/textDeltaY properties instead. The getTextDeltaXUnits()/getTextDeltaYUnits() methods tell you how to interpret the textDeltaX and textDeltaY values (characters, or lines and pages).

  - To get the number of touch points, use the getTouchCount() method. This lets you implement multi-finger scrolling.
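The relationship between eventSetId and the touch count in TouchEvent can be modeled in plain Java, without any JavaFX classes. This is only a sketch under stated assumptions, not the JavaFX API: TouchSample is a hypothetical stand-in for a TouchEvent that keeps just its set id, its touch count and the id of its touch point, and TouchSetCollector is a hypothetical helper that regroups the per-point events of one set. A set is treated as complete once as many events as its touch count have arrived.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model of TouchEvent sets. TouchSample and TouchSetCollector are
// hypothetical stand-ins, not JavaFX classes.
class TouchSetDemo {
    // Stand-in for one TouchEvent: the fields mirror getEventSetId(),
    // getTouchCount() and the id of the touch point this event is about.
    static final class TouchSample {
        final int eventSetId;
        final int touchCount;
        final int pointId;

        TouchSample(int eventSetId, int touchCount, int pointId) {
            this.eventSetId = eventSetId;
            this.touchCount = touchCount;
            this.pointId = pointId;
        }
    }

    // Regroups per-point events into complete sets, keyed by eventSetId.
    static final class TouchSetCollector {
        private final Map<Integer, List<TouchSample>> pending = new HashMap<>();

        // Returns the whole set once touchCount events with the same
        // eventSetId have been added; returns null while it is incomplete.
        List<TouchSample> add(TouchSample sample) {
            List<TouchSample> set =
                    pending.computeIfAbsent(sample.eventSetId, id -> new ArrayList<>());
            set.add(sample);
            if (set.size() < sample.touchCount) {
                return null;
            }
            pending.remove(sample.eventSetId);
            return set;
        }
    }

    public static void main(String[] args) {
        TouchSetCollector collector = new TouchSetCollector();
        // Two fingers move at once: both events carry eventSetId 7.
        System.out.println(collector.add(new TouchSample(7, 2, 1)) == null); // true
        List<TouchSample> set = collector.add(new TouchSample(7, 2, 2));
        System.out.println(set.size()); // 2
    }
}
```

In a real handler the same information comes from the event itself, so a collector like this is only needed when an application wants to react once per set rather than once per touch point.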

Additional information on the sample application

I showed three samples at the workshop. I had also recorded videos of them in advance in case the live demonstration failed.

- TouchEvent sample: draws a circle at each touch point. A circle can be moved by dragging it with a finger and erased with a double tap.

- Scroll/Rotate/ZoomEvent demonstration: shows that a Rectangle can be moved and transformed easily by using the values obtained from these events.

- Demonstration of scrolling support in JavaFX controls: I used the ListView control and the Pagination control introduced in JavaFX 2.2. These controls support scrolling without any extra code.
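Applying the values from scroll, rotate and zoom events to a node's transform properties follows a simple rule: scroll deltas and rotation angles are added to the current values, while the zoom factor is multiplied into the scale. The plain-Java sketch below illustrates that rule and is not the code from the gist; NodeTransform is a hypothetical stand-in for a Node's translateX/translateY, rotate and scaleX/scaleY properties. In an actual JavaFX handler, the zoom update would read node.setScaleX(node.getScaleX() * event.getZoomFactor()).

```java
// Plain-Java model of applying gesture values to transform properties.
// NodeTransform is a hypothetical stand-in for a Node's translateX/Y,
// rotate and scaleX/Y properties; it is not a JavaFX class.
class GestureTransformDemo {
    static final class NodeTransform {
        double translateX;
        double translateY;
        double rotate;          // degrees
        double scaleX = 1.0;
        double scaleY = 1.0;

        // Scroll deltas are pixel offsets since the previous event: add them.
        void onScroll(double deltaX, double deltaY) {
            translateX += deltaX;
            translateY += deltaY;
        }

        // The rotation angle is the change in degrees since the previous event: add it.
        void onRotate(double angle) {
            rotate += angle;
        }

        // The zoom factor is a ratio (greater than 1 zooms in): multiply by it.
        void onZoom(double zoomFactor) {
            scaleX *= zoomFactor;
            scaleY *= zoomFactor;
        }
    }

    public static void main(String[] args) {
        NodeTransform rect = new NodeTransform();
        rect.onScroll(15, -5);
        rect.onRotate(30);
        rect.onZoom(2.0);
        rect.onZoom(0.5);
        // prints "translate=(15.0, -5.0) rotate=30.0 scale=1.0"
        System.out.println("translate=(" + rect.translateX + ", " + rect.translateY
                + ") rotate=" + rect.rotate + " scale=" + rect.scaleX);
    }
}
```

Multiplying rather than adding the zoom factor is what makes zooming in by 2.0 and then out by 0.5 return the node to its original size.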

Because of space limitations, the source code in the slides is condensed, so please check the full source code uploaded to the gist.

Here are some additional explanations.

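- The Node class has the translateX/translateY, rotate and scaleX/scaleY properties for translation and transformation. The amounts obtained from the gesture events can be applied directly to these properties: add the scroll deltas to translateX/translateY and the rotation angle to rotate, and multiply scaleX/scaleY by the zoom factor.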

- Both the Pagination and ListView controls display the list of font families available on the system, obtained from the javafx.scene.text.Font.getFamilies() method.

- Each page displayed in the Pagination control is a VBox containing Labels, as follows. Pagination is my favorite control because its API is performance-friendly: the page factory builds a page only when that page is displayed.

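```java
// Excerpt from the controller class (see the gist for the full source).
// fonts is a List<String>; pagination and lvFonts are the Pagination and
// ListView<String> (assumed to be injected from FXML); FONTS_PER_PAGE is an int constant.
@Override
public void initialize(URL url, ResourceBundle rb) {
    fonts = Font.getFamilies();
    // Round up so that a last, partially filled page is not dropped.
    pagination.setPageCount((fonts.size() + FONTS_PER_PAGE - 1) / FONTS_PER_PAGE);
    // The factory is called with the index of the page to be displayed.
    pagination.setPageFactory(new Callback<Integer, Node>() {
        @Override
        public Node call(Integer idx) {
            return createPage(idx);
        }
    });
    lvFonts.setItems(FXCollections.observableArrayList(fonts));
}

// Builds one page: a VBox holding one Label per font family name.
private VBox createPage(int idx) {
    VBox box = new VBox(5.0);
    int first = idx * FONTS_PER_PAGE;
    // Stop at the end of the list, which the last page may reach early.
    int end = Math.min(first + FONTS_PER_PAGE, fonts.size());
    for (int i = first; i < end; i++) {
        Label lblFont = new Label(fonts.get(i));
        box.getChildren().add(lblFont);
    }
    return box;
}
```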
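Integer division such as fonts.size() / FONTS_PER_PAGE rounds down, so a final, partially filled page would be dropped unless the page count is rounded up, and the last page must then stop at the end of the list. The arithmetic can be checked in plain Java; PageMath is a hypothetical helper, and the sizes (23 items, 10 per page) are made-up numbers rather than a real font list.

```java
// Plain-Java check of the page arithmetic behind the Pagination sample.
// The item counts are made-up numbers, not a real font list.
class PageMath {
    // Pages needed for itemCount items, rounding up to keep a partial last page.
    static int pageCount(int itemCount, int perPage) {
        return (itemCount + perPage - 1) / perPage;
    }

    // Half-open index range {from, to} of the items shown on page idx.
    static int[] pageRange(int idx, int itemCount, int perPage) {
        int from = idx * perPage;
        int to = Math.min(from + perPage, itemCount);
        return new int[] {from, to};
    }

    public static void main(String[] args) {
        int families = 23;
        int perPage = 10;
        System.out.println(families / perPage);            // 2: truncation loses the last 3 items
        System.out.println(pageCount(families, perPage));  // 3
        int[] last = pageRange(pageCount(families, perPage) - 1, families, perPage);
        System.out.println(last[0] + ".." + (last[1] - 1)); // 20..22
    }
}
```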

As you can see, JavaFX 2.2 adds APIs that make it easy to develop multi-touch applications. Unfortunately, the only environment where they are fully available is Windows 8 devices (and only in desktop mode), but let's all give them a try!